Nvidia’s H100 AI chip has been instrumental in the company’s success, propelling it to a multitrillion-dollar valuation that may exceed those of Alphabet and Amazon. Now Nvidia is introducing the new Blackwell B200 GPU and GB200 “superchip” to extend its lead in the market.

Nvidia CEO Jensen Huang presents the new GPU alongside the H100 during the GTC livestream. Image: Nvidia

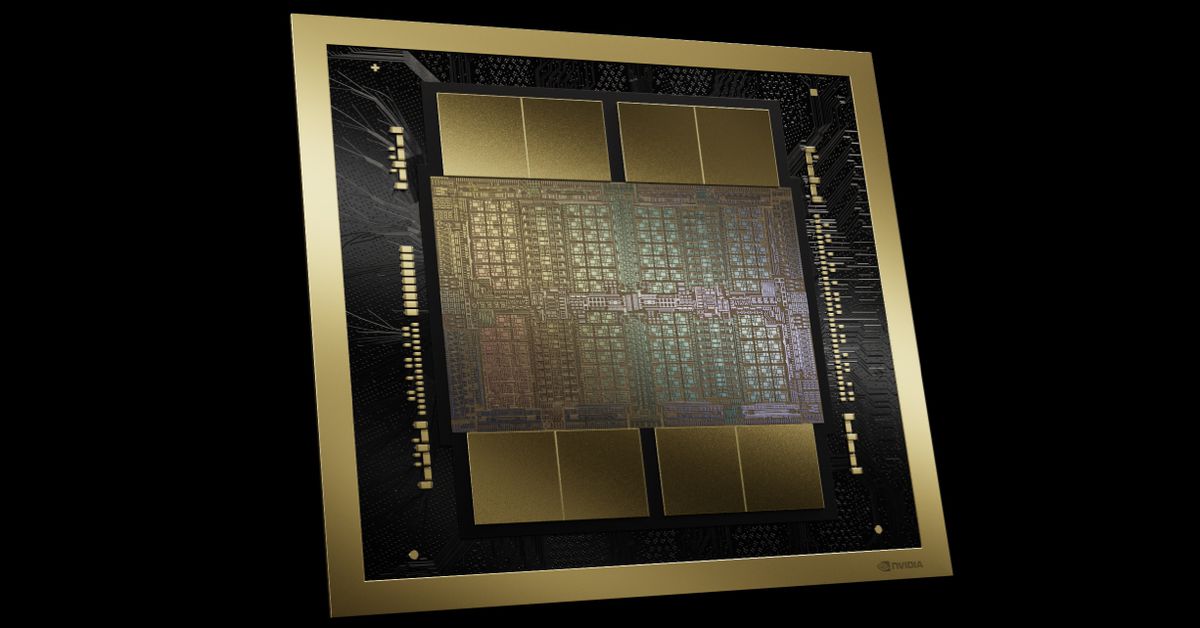

Nvidia claims that the new B200 GPU can provide up to 20 petaflops of FP4 horsepower from its 208 billion transistors. Combining two of these GPUs with a single Grace CPU in a GB200 configuration can, Nvidia says, deliver 30 times the performance for LLM inference workloads while being significantly more efficient, reducing cost and energy consumption by up to 25 times compared with an H100.

Nvidia states that training a 1.8-trillion-parameter model previously required 8,000 Hopper GPUs and 15 megawatts of power, whereas 2,000 Blackwell GPUs can now do the same job while consuming just 4 megawatts.
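To put those training figures in proportion, the short Python sketch below works out the ratios. It uses only the four numbers Nvidia quoted; the variable names are illustrative, and the per-GPU figure is simply cluster power divided by GPU count, which assumes all of the quoted power is attributable to the GPUs.

```python
# Figures quoted by Nvidia for training a 1.8-trillion-parameter model.
previous = {"gpus": 8_000, "megawatts": 15}   # earlier-generation cluster
blackwell = {"gpus": 2_000, "megawatts": 4}   # Blackwell cluster

# How many times fewer GPUs and how much less power the new setup needs.
gpu_reduction = previous["gpus"] / blackwell["gpus"]              # 4.0
power_reduction = previous["megawatts"] / blackwell["megawatts"]  # 3.75

# Average power per GPU in kilowatts (assumption: cluster power / GPU count).
previous_kw_per_gpu = previous["megawatts"] * 1_000 / previous["gpus"]     # 1.875
blackwell_kw_per_gpu = blackwell["megawatts"] * 1_000 / blackwell["gpus"]  # 2.0

print(f"GPU count reduced {gpu_reduction}x, power reduced {power_reduction}x")
print(f"kW per GPU: {previous_kw_per_gpu} before, {blackwell_kw_per_gpu} with Blackwell")
```

On these numbers the saving comes from needing a quarter as many GPUs, not from each GPU drawing less power.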

On a GPT-3 LLM benchmark with 175 billion parameters, Nvidia claims that the GB200 offers seven times the performance of an H100 and four times the training speed.

Visualization of a single GB200 unit consisting of two GPUs, one CPU, and one board. Image: Nvidia

Nvidia highlights a second-gen transformer engine in the GB200 that doubles compute, bandwidth, and model size by using four bits per neuron instead of eight, which is where the 20 petaflops of FP4 performance comes from. Additionally, the next-gen NVLink switch allows seamless communication between 576 GPUs with 1.8 terabytes per second of bidirectional bandwidth.
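The effect of halving the bits per value can be seen with some rough arithmetic. The Python sketch below estimates how much memory a model's weights alone would occupy at 8 bits and at 4 bits, using the two model sizes mentioned earlier. The helper `weight_gigabytes` is hypothetical, and the estimate deliberately ignores activations, caches, and any per-block scaling metadata a real low-precision format would carry.

```python
def weight_gigabytes(num_params: int, bits_per_param: int) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes).

    Assumption: every parameter is stored at exactly bits_per_param bits,
    with no overhead for scaling factors or padding.
    """
    total_bytes = num_params * bits_per_param / 8
    return total_bytes / 1e9


models = {
    "GPT-3 scale (175 billion parameters)": 175_000_000_000,
    "1.8 trillion parameters": 1_800_000_000_000,
}

for name, params in models.items():
    at_8_bits = weight_gigabytes(params, 8)
    at_4_bits = weight_gigabytes(params, 4)
    print(f"{name}: {at_8_bits:,.1f} GB at 8 bits, {at_4_bits:,.1f} GB at 4 bits")
```

Halving the storage per value means the same memory and the same bandwidth can hold or move twice as many parameters, which is the sense in which four-bit precision doubles model size and bandwidth.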

To keep pace with the increased computing power, Nvidia developed a new network switch chip with 50 billion transistors and 3.6 teraflops of FP8 onboard compute. Nvidia notes that clusters of 16 GPUs previously spent the majority of their time communicating rather than computing.

Nvidia introduces both FP4 and FP6 capabilities with the Blackwell series. Image: Nvidia

Nvidia anticipates high demand for the GB200 superchips and offers large-scale supercomputer-ready configurations like the GB200 NVL72, which combines 36 Grace CPUs and 72 Blackwell GPUs in a single liquid-cooled rack for AI training and inference.
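A rough sense of what one such rack adds up to can be had from the per-chip figures quoted earlier. The Python sketch below takes the rack to hold 72 GPUs (the figure implied by the NVL72 name), uses the two-GPUs-plus-one-CPU makeup of a GB200, and assumes peak FP4 throughput simply adds across GPUs. That last assumption gives a theoretical ceiling, not a measured result.

```python
# Per-chip figures from Nvidia's announcement.
GPUS_PER_SUPERCHIP = 2        # a GB200 pairs two B200 GPUs...
CPUS_PER_SUPERCHIP = 1        # ...with one Grace CPU
PEAK_FP4_PFLOPS_PER_GPU = 20  # "up to" figure quoted for the B200

# Assumption: the "72" in NVL72 is the number of GPUs in the rack.
RACK_GPUS = 72

superchips = RACK_GPUS // GPUS_PER_SUPERCHIP  # 36 GB200 superchips
cpus = superchips * CPUS_PER_SUPERCHIP        # 36 Grace CPUs

# Assumption: peak throughput scales linearly with GPU count.
peak_fp4_petaflops = RACK_GPUS * PEAK_FP4_PFLOPS_PER_GPU  # 1440
peak_fp4_exaflops = peak_fp4_petaflops / 1_000            # 1.44

print(f"{superchips} superchips, {cpus} CPUs, {RACK_GPUS} GPUs")
print(f"Theoretical peak FP4: {peak_fp4_petaflops} petaflops ({peak_fp4_exaflops} exaflops)")
```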

The GB200 NVL72 configuration featuring a liquid-cooled rack. Image: Nvidia

Each rack houses GB200 superchips alongside NVLink switches. Nvidia indicates that one such rack can support a 27-trillion-parameter model, which would exceed the rumored size of GPT-4.

Major tech companies like Amazon, Google, Microsoft, and Oracle plan to integrate the NVL72 racks into their cloud services, signaling widespread adoption of Nvidia’s advanced technology.

Nvidia also offers comprehensive solutions like the DGX Superpod for DGX GB200, combining multiple systems for enhanced computing capabilities in a single setup.

Nvidia emphasizes that its systems can scale to tens of thousands of GB200 superchips, interconnected through its high-speed networking technology.